Curated Content October 2023

A few pieces of content I thought were worthwhile in the month of October.

Articles

What's an Operational Definition Anyway?

Another great article from Cedric Chin of Commoncog, this time on operational definitions. They matter because without one, the data you collect is likely to be meaningless or impossible to compare coherently over time.

The most important takeaway is the set of three things that make up an operational definition:

A criterion. The thing you want to measure.

A test procedure. What process are you going to use to measure the thing?

A decision rule. How will you decide if the thing you’re looking at should be included (or excluded) from the count?

He also gives a great example of a metric that had no established operational definition, and of how an organization gamed that metric by changing what was being measured.

McKinsey Developer Productivity Review

Probably the single best article to read as a follow-up to the McKinsey Developer Productivity report.

Dan North gives McKinsey's report a fair but thorough review. He notes many areas where it comes up short and cites a number of places where existing research was available that McKinsey ought to have built on.

You'll forget most of what you learn. What should you do about that?

This one is off the beaten path, even for me, but I found it a really thought-provoking piece about learning.

The author argues that the most important thing you develop with regard to learning is your attitude toward it, because you'll often forget the details of what you learn, whether through the education system, work, or self-education.

The students who ultimately succeed in learning R are not the ones who force themselves to memorize functions or do a bunch of coding drills. They’re the ones who accept they will feel stupid and that most of the rules will at first seem totally arbitrary, and who understand that they will gain great power if they just keep going. (Emphasis mine.)

This applies to programming, but I've found it applies just as well to familiarizing yourself with new domains.

Don't Be Beaten By Agile Cadences. Slow Down & RELAX

Despite coming from an unlikely source of great software content (LinkedIn), this article from Jason Gorman is one I'll keep around for new folks I bring into my organization.

People who aren't used to working in small slices, where code is continuously merged into the mainline and deployed multiple times per day, can struggle to adapt. That doesn't mean they're bad engineers; they just have to learn a new way of working.

The article is a reminder to be patient with yourself while you make that shift, because it takes time.

Books

Rigor Mortis: How Sloppy Science Creates Worthless Cures, Crushes Hope, and Wastes Billions - Richard Harris

Although it's well outside my personal expertise, I found Rigor Mortis a really interesting read on the missteps we make and the perverse incentives we have set up in biomedical research.

It also has some bearing on how we ought to think about empirical research in software development, particularly with regard to replicability.

Conf Talks

No conf talks this month.

Podcasts

No podcasts this month.

Microposts

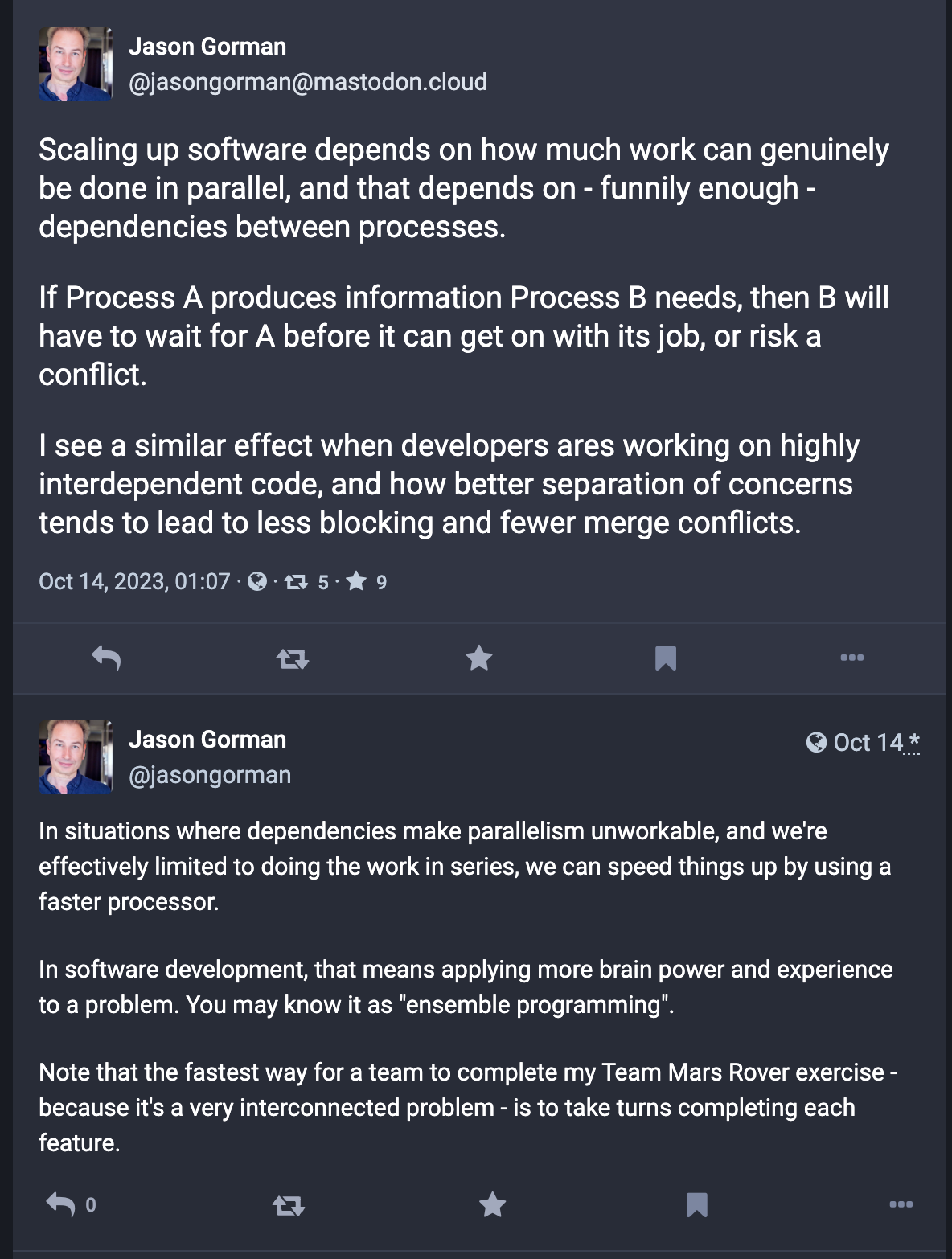

An interesting post from Jason Gorman about when different programming approaches, such as mob/ensemble programming, make sense.

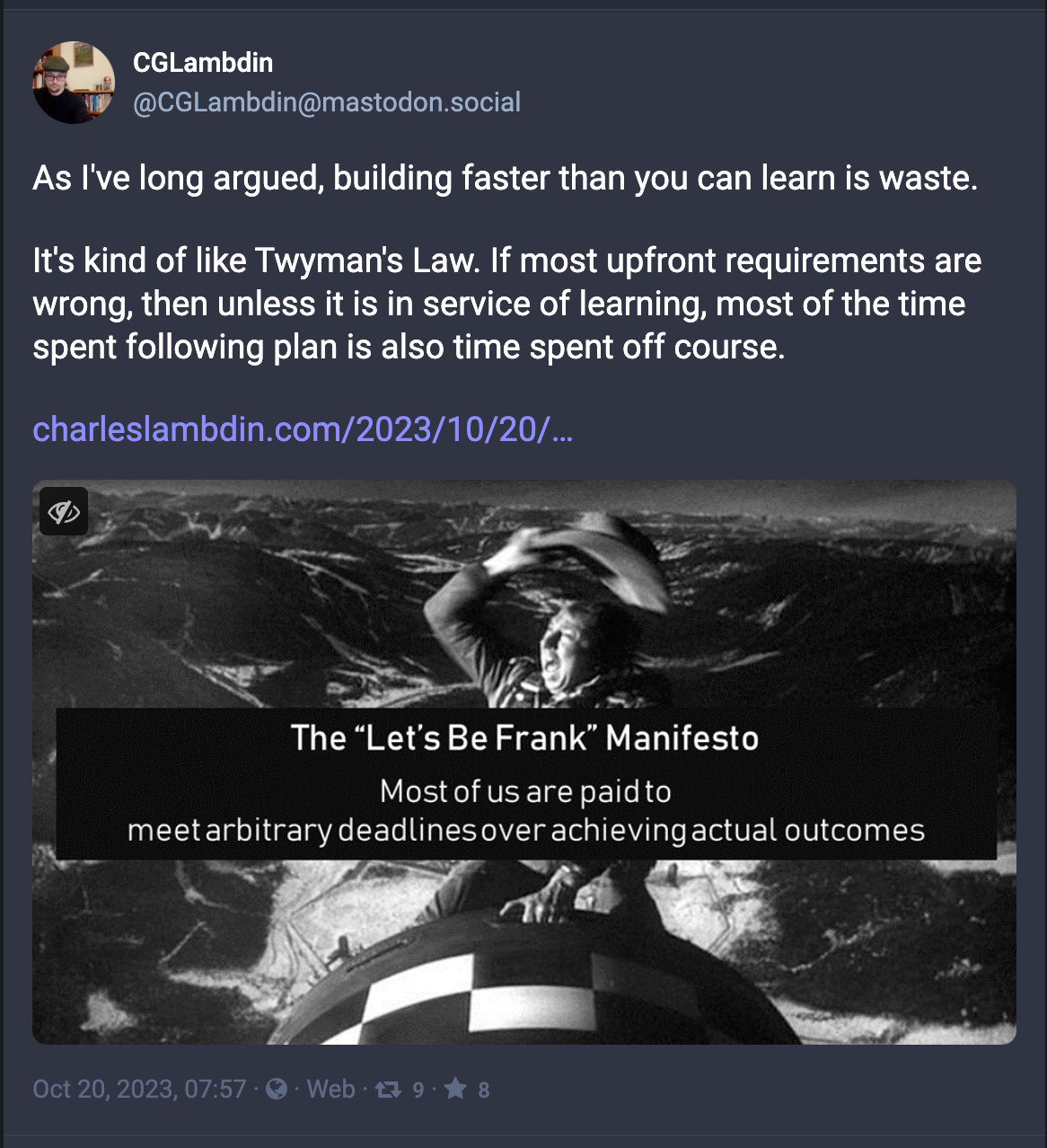

I hadn't heard of Twyman's Law before. I didn't feel the linked article connected with the post as well as I would have liked, but the post itself really resonated with me as a description of a key constraint in product development.